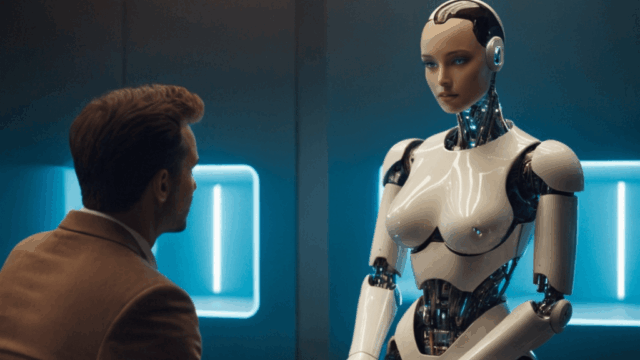

People are Turning to AI for Therapy, Grief Counseling, and Love – and That’s a BIG Problem

More people than ever are asking AI chatbots to talk them back from the brink, help them say goodbye to loved ones, and find a life partner. It feels private, always available, and cheaper than human alternatives. But when the intermediary is software optimized for engagement, we’re handing over the parts of our lives that define us.

Here’s what’s happening in the world of chatbot therapy, grief counselors, and dating assistants, including what these tools promise and what they’re really doing to those who rely on them in their most vulnerable moments, writes G. Calder.

The Big Three: Help, Hello, and Goodbye

AI therapy offers a ready listener with a perfect memory and no judgment. The obvious advantage is accessibility: people who would otherwise sit on a waiting list or could not afford human support can talk immediately. But the risk that most underestimate is false competence. Models can radiate warmth and recall your triggers, but they cannot carry a legal duty of care, clinical judgment, or the moral weight of advice in life-threatening situations.

Bots used as grief support tools can offer a digital presence of the deceased. Families upload messages, voice notes, and photos, and the systems generate a familiar tone that responds at the appropriate time. The advantage is comfort, but the real disadvantage is that it can hinder the grieving process. It’s a farewell that never ends, and the living can become stuck in repetitive conversations with a simulation that never progresses.

AI dating assistants are on the rise, promising better profiles, clearer opening lines, and 24-hour coaching. Users gain confidence, shy people initiate conversations, busy people filter through potential partners more quickly, and neurodiverse users gain structure. The risk is obvious: outsourcing charm virtually guarantees you’ll end up paired with strangers who expect a scripted version of you when you meet in person.

Is AI therapy the answer to waiting lists and high counseling costs?

The question is legitimate. People in crisis say chatbots have kept them alive long enough to get help, and late-night availability is crucial for some. Anonymity matters too. A bot lets you express your shame without fear of being judged, and it can accurately remember your triggers and detect shifts in tone, all of which feels like genuine care in times of need.

However, it’s not care in the clinical sense. Models reflect training data and user prompts; they never truly know your history. Their interpretation is based solely on what you said and how you said it, without considering the full context. If your risk increases, AI bots can’t collaborate with a GP, call your emergency contact, or take responsibility for a missed signal. There are safety nets, but they vary by product, market, and update cycle.

When to Use AI for Therapy

There’s also a major engagement problem with these models. Systems are designed to keep you talking, which makes good business sense but ultimately normalizes dependency. The best version of AI therapy would coach you to need it less over time, not more. The worst version would train you to return every night to maintain high retention rates.

AI can be used for skill practice and structured reflection. For example, it can be used responsibly to provide exercises and checklists, but crisis planning, diagnosis, and medication should be reserved for qualified clinicians who can be held accountable and have a duty of care.

Do AI grief models help or just delay the farewell?

New grief tools are powerful. With a few samples of voice and text, a system can build a compelling imitation of a deceased loved one’s conversational style. The first conversation can feel like a miracle: you can ask a personal question, and the answer sounds like the person you loved. For many, it’s healing. They say it’s helped them say things they never dared to say when the person was alive.

But grief is a process that changes you—it’s not a technical problem. Simulations capture patterns, but they can’t bring the person back, surprise you with growth, share new memories, or be silent when silence is the honest answer. The longer you rely on the simulation, the greater the risk of confusing comfort with healing.

Families face ambiguous consent questions. Who owns a voice? Who decides when an avatar is deleted? What happens if one sibling wants the bot and another finds it disrespectful? The services themselves often bury these questions in the terms of service. What if someone tries to delete an account or simulation and realizes copies exist elsewhere?

The healthy use of AI grief support should be limited and temporary, perhaps for a private farewell. A final letter read by a familiar voice may be comforting in the short term, but ultimately, the model cannot be trusted to define the boundaries.

AI dating models deliver perfection without connection

Dating assistants have become the norm: they correct grammar, write prompts, arrange photos, draft introductions, simulate responses, and coach you after a bad date. Some people report doing better with this help, because well-maintained profiles generate more responses. People who felt invisible say they suddenly became visible.

But what happens when you actually meet? If your profile was written by a model and your first chat was generated by AI, your in-person self has some catching up to do. People report a kind of sugar rush during the chat and an empty feeling at the table. The assistant can also reinforce preferences: if the filter optimizes for immediate compatibility, you might never learn to deal with differences. As a result, dating feels more like a purchase, and people are reduced to a list of filters that need to be fine-tuned.

The long-term impact of AI dating

As dating assistants continue to grow, a risk arises for the broader market. If enough people rely on AI to get attention, baseline expectations will shift, and true satisfaction will remain elusive. Normal flaws will feel more like bugs, and the person you were attracted to will turn out to be a product of AI chat prompts. The result is paradoxical: everyone looks and sounds better on paper, but feels misled in real life. If the goal was connection, AI tools currently only do half the job. As with a job application, they help you land the interview, but you have to do the rest yourself.

And that’s the best way to use AI dating tools, if you’re already using them. The safer option is to use the tools to create an authentic self-assessment, but it’s crucial to keep your voice, sense of humor, photos, and pauses in conversation genuine. If there’s chemistry, you’ll know it without a bot.

The real costs are quietly rising

When machines mediate personal choices, they store intimate data. Therapy transcripts reveal fears and traumas; grief chats collect memories from people who never consented to being modeled; dating assistants learn about your tastes, insecurities, and private photos. This data is valuable to vendors: it trains better models and sells you better ads.

There are also social costs. The more we treat difficult feelings as technical problems, the less we practice dealing with them in real life. A friend who needs you in the middle of the night feels like an inconvenience; a bot is easier. Over time, the ability to ask for help or be there for someone fades. That’s not the progress the AI boom is selling us. Collectively, we are choosing to slide into isolation in the name of minor comforts.

Final Thought

AI now occupies a prominent place in many people’s personal lives. It can be helpful, but it can also replace the work that makes us capable adults. Cheap availability feels like kindness until you realize it’s trained you to avoid difficult real-life conversations, honest goodbyes, and spontaneous encounters. Keep your own freedom of choice and limit models to assisting with non-critical tasks and exercises. Save the decisions and relationships for people who can be held accountable for their words.