Anatomy of AI Cold Turkey

The problem with AI and the rest of Ultra-Processed Life is we slowly lose the ability to even grasp what’s been lost.

No, this title is not AI slop. AI cold turkey means there’s no AI monkey on your back: you don’t snort it, mainline it, consume it or use a skin patch. You’re done with that dependence and addiction.

I don’t use AI in my writing, or in any other way. To the degree that Google Search now displays an “AI overview,” I am exposed to this application of AI, but I can click on a primary link and ignore the overview, which is sometimes wrong. For example, a search for a local county council member listed an official who was no longer in office. The county website with the current office holders was the top link.

I want to explain my decision not to use AI, which can be understood as a kind of intellectual body with a specific anatomy. It’s not a decision based solely on ethics, though it’s already clear that AI is turning the Internet into a mixture of untrustworthy slop and malicious content. It’s not a Luddite reaction to new technology; I was an early adopter of personal computer and Internet technologies.

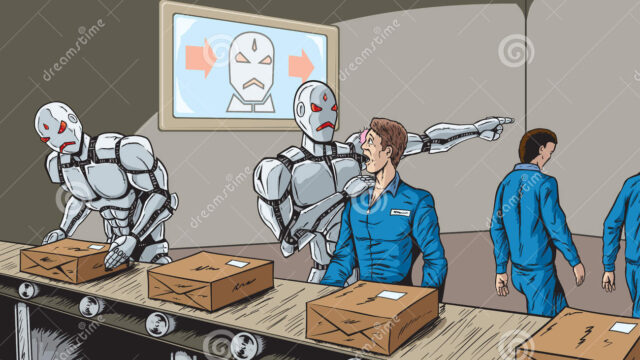

I am not against AI in its entirety. To the degree that it automates mechanical processes, it is useful: for example, generating and organizing transcripts, or scripting robots to trundle around a warehouse, identify various items and place them in a shipping box. These are easy-to-formalize processes, not thinking.

I know a very few world-wise individuals with exceptionally broad life experience and expertise who have insightfully queried AI tools. But these individuals likely represent 1% of the general populace.

The problem is it’s easy to mistake mechanical processes for thought.

The reason I don’t use AI is the many ways it degrades our ability to think deeply and independently. This degradation occurs on multiple levels.

I’ve read rather widely on AI since the mid-1980s, when “expert systems” were all the rage. More recently, I’ve written 21 essays on AI this year. If you read them all, you’ll have a good idea of the issues I see as consequential.

The core problem with AI is that it’s presented as thinking for you, but it doesn’t do any actual thinking in the manner of human thought. When we think through an issue, problem or new idea on our own, we apply both our intuitive right hemisphere and our rational, reductionist left hemisphere. Much of our creative thinking occurs while we’re asleep.

As a modest example, a melody came to me in a dream. It’s not a great melody but it demonstrates the point: our mind works on multiple levels during sleep, integrating what we’ve learned in the past with what we’re learning now.

AI generates summaries, not knowledge. As for the claim that AI is equivalent to a human PhD, consider this: if a PhD composes a brief summary of a topic for us, does that give us a PhD-level grasp of the topic? No. A brief summary is not knowledge, any more than a recipe is the equivalent of actually knowing how to cook.

Summaries create an illusion of knowledge, but they aren’t actual knowledge or understanding. AI can generate a summary of Capitalism, for example, but that’s neither knowledge nor understanding: it’s a superficial gloss.

Someone seeking a real understanding of Capitalism would do well to start by reading all three volumes of Fernand Braudel’s Civilization and Capitalism, 15th-18th Century: Vol. 1: The Structure of Everyday Life, Vol. 2: The Wheels of Commerce and Vol. 3: The Perspective of the World.

Once we have an understanding of the development of Capitalism, then we can add readings of Marx, Hayek, Polanyi and so on, building on the foundation established by Braudel’s trilogy.

There is no substitute for reading in depth and thinking through the material on our own. This process cannot be done for us by AI; to learn, we must do the learning ourselves, which takes both hemispheres of our mind and the sustained application of independent thinking.

If the goal is to parrot knowledge and mimic understanding using superficial glosses, then AI can do that. If the goal is to gain a working knowledge and understanding, then we have to do all the work ourselves. Studies have already found that AI functions as intellectual smack: once we start using it, we become dependent on its summaries and answers to do our thinking for us.

Not only do we become dependent on instant answers, we also lose the ability to detect AI’s errors and its subtle editing and interpretation of complex topics. We “know everything” because an instant answer is always at our fingertips, but we understand nothing and are easily misled, because we no longer have an independent ability to assess the accuracy or hidden editing of AI’s “answers.”

https://charleshughsmith.substack.com/p/anatomy-of-ai-cold-turkey