Some Reflections on AI

Translated from the Swedish and originally published here.

Part 1: Understanding without Using Language

Why do we even talk about artificial intelligence? Why do we say that algorithms learn? Why do we claim that we communicate with computers? What makes these metaphors so compelling that we fail to recognize them as metaphors and to question them?

A computer program cannot be intelligent. Or stupid, for that matter. It can be good or bad at performing a task. A computer program cannot learn things. It can have a more or less flexible design. It can be more or less efficient and versatile.

Whoever solves problems is intelligent. Is a technology that can solve problems, then, intelligent? Solving problems is certainly a sign of intelligence, and one of the clearest and least equivocal. The harder the problem, the more intelligent, we have reason to believe, is the one who solves it.

Using signs and language is another proof of intelligence. A use of signs that enables rational communication is a kind of confirmation of the sign user’s intelligence. When we search for (easily applicable) criteria for intelligence, it’s hard to come up with anything better than this.

Therefore, when confronted with language-using problem solvers, we are spontaneously inclined to believe we are dealing with an intelligent phenomenon. This, incidentally, is an example of an intelligent reaction.

But intelligence lies neither in solving the problem nor in the steps between the formulation of the problem and the proposed solution. To the extent that a problem can be formalized, i.e., expressed using a limited set of unambiguous, interpretation-independent signs whose use is governed by a number of formation rules and inference rules, solving it can in principle always be automated, i.e., decoupled from conscious, comprehension-driven thinking, and performed by, for example, a computer. In principle, there is always an algorithm (a sequence of operations) that can lead from a problem formulated in a formal language to a solution formulated in the same language. Digitization and computer technology bear witness to this.

Language is an incomplete and misleading criterion for intelligence. (Isn’t it the child’s intelligence that allows it to learn a language?) This is partly because many other, more important forms of intelligence exist, and partly because the use of signs does not necessarily indicate intelligence. Moreover, this criterion has the negative consequence of making it harder for us to understand the existence and behavior of creatures that do not seem to use any language or do not communicate in a way comparable to our own. It unnecessarily increases the differences between us and other living beings.

Not everything is a problem in the sense we usually give the word, i.e., a task whose solution we do not have when we are faced with it. It is possible, however, that with a little effort many “unproblematic” activities could also be described as problems. In any case, intelligence lies in the way a problem is perceived, i.e., in the nature of the relationship between the problem and the problem solver. More precisely, intelligence consists in the problem’s being understood: first, as a problem at all, and second, as a problem of a specific kind, something that demands a solution with greater or lesser urgency. In other words, a problem presupposes problem awareness and a solution-oriented mentality. For this, as for psychological states in general, there is no formalized language and no algorithm.

Intelligence is understanding. To understand something is to use one’s intelligence. To be intelligent is to have (a good) capacity for understanding. So what, then, is understanding? This question should be answered in a way not limited to humans and human circumstances.

The elementary prerequisite for something to be understood or, put differently, for it to be relevant to describe a cognitive act as understanding is: (1) that there are two qualitatively different information-processing levels, and (2) that it is possible in some way to move from one level to the other. To understand (something) is to take this step. The one who takes this step is consequently someone who understands.

The reason digital systems (systems based on and working with purely formal signs and sign rules) cannot take this step is that for them there is only one level: the digital one. This is not a shortcoming of these systems. On the contrary, the key to their usefulness and efficiency lies in the fact that they can handle tasks and solve problems without being forced, as humans are, to understand them. By working on one and the same level throughout, they achieve significant gains in computational capacity and speed. Problem-solving then becomes a matter of the design of the algorithms and of the architecture and capacity of the system that processes the digital signs. If it proves possible to write algorithms that can in turn write new and better algorithms, and if some of these algorithms can help improve the data flow itself (the computer’s construction and capacity), there are no theoretical limits to this development.[1]

A simple yet important example of understanding is seeing something or, more precisely, seeing something as something (specific). Seeing a tree, a cat, the clouds in the sky, or anything at all is, in other words, an example of intelligence. What problem is being solved here? One could argue it’s an identification problem. But doesn’t it often happen in such contexts that the solution comes before the problem? We don’t ask, “What is that speckled thing moving over there?” but instead say, “There’s the neighbor’s Bengal cat again.”

A creature must see or otherwise perceive its immediate surroundings in order to move successfully. It must also be able to register the possibilities for movement and the obstacles between itself and its destination. Moving between two points in space is therefore another important example of intelligence.

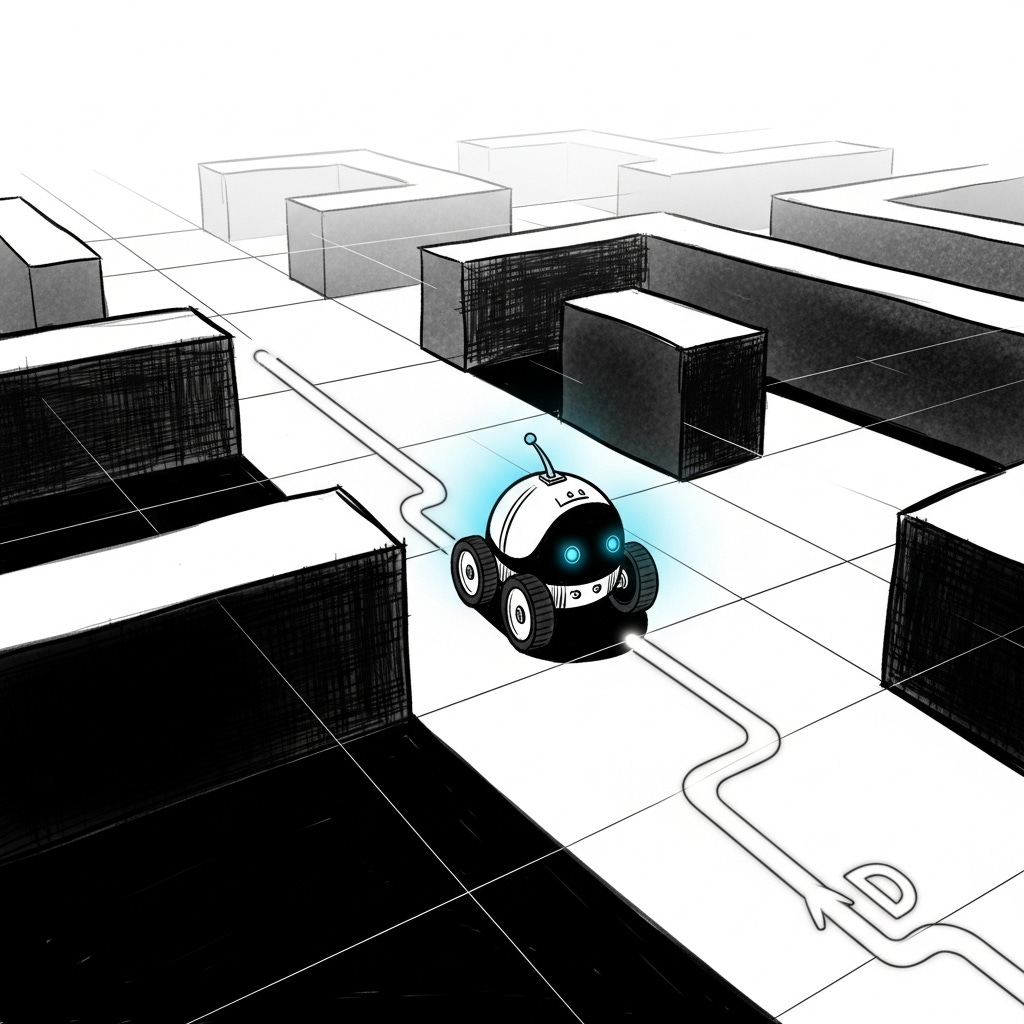

The Story of the Rolling Robot

A mobile robot equipped with a navigation program and sensors to register its surroundings uses the sensors to digitize three-dimensional space into a one-dimensional one. It then uses its algorithms to solve the task of moving on this surface. While the little robot rolls forward between obstacles, the computer program does not move anywhere. It moves no more than it would if it were playing Go or Minecraft. But aren’t we dealing here with two qualitatively distinct levels and a step from one to the other? The answer is no. For the computer program to perform its calculations, i.e., to transform input into output according to its algorithms, the input data must be in digital form from the start. The sensors do not see the way an eye does in interaction with an interpreting and judging brain. Their information is not first electrochemical and only then conceptual and imagistic/representational. They digitize in interaction with an equally digital computer program. For the robot’s computer program, therefore, only the Flatland of the one-dimensional digital map exists. Its tasks are defined and performed entirely within the framework of this Flatland. The computer program is constructed to process and organize the sensors’ digital data in a specific way, not to interpret and evaluate them, and it therefore cannot misinterpret them or make mistakes. (When an element of interpretation is part of a process, the result is never entirely given and can differ from case to case. An interpretation is always underdetermined. But this also has the advantage that an interpretive process can handle incomplete and partially incorrect information.)

Consider a computer program equipped with feedback mechanisms that allow it to supplement and correct its digitizations of three-dimensional space. These mechanisms let it improve those digitizations progressively in relation to its instructions, e.g., to get from point A to point B in the shortest possible time without colliding with anything. However fascinating this mechanism may be, and however great its potential for development, it is not an example of interpretation and learning. What happens here is that one one-dimensional plane is replaced by another with a partially different design and resolution. This happens because the algorithm has identified new (and better) possible solutions on the new, information-richer plane. Interpretation implies the opposite of replacing one plane of reality with another, and a learning process stays within the same world the whole time. Since the computer program only “understands” digital language, independent three-dimensional space does not exist for it. The computer program, so to speak, sees only itself. The flow of input from the sensors and of output to the movement mechanisms exists only in and through this program.

As the above examples suggest, intelligence is not reserved for humans or for the animals we regard as most advanced in terms of consciousness, such as chimpanzees, corvids, and dolphins. The examples were chosen deliberately to highlight this. Intelligence in humans and other creatures (plants as well as animals, invertebrates as well as vertebrates) is not a question of either/or but of degrees and different forms. Some creatures in nature possess intellectual abilities that humans lack. Assuming that bees have intelligence makes it easier for us to explain their behavior. New discoveries of communication methods and cooperation techniques among trees provide possible evidence that trees, too, possess intelligence.[2] It is undoubtedly more interesting and rewarding, albeit morally and emotionally more demanding, to live among intelligent beings in an intelligent world than in a mute and mechanical one. We must relate differently to an insect or plant that can feel and react than to one consisting solely of passive or automatic life processes. It is surprising that advanced scientific research should be required for us to discover and understand things that people in technically and socio-economically far less advanced societies have known for a long time.

If visual and movement abilities seem too simple to be interesting in this context, it is because these forms of intelligence are so common that we take them for granted and don’t think of them as such. We have a tendency to perceive intelligence as something exclusive. It is easier for us to exploit plants and animals as we wish if they lack intelligence or if their intelligence is comparatively insignificant. But the narrower our conception of intelligence, the harder it becomes for us to understand it and explain its prerequisites.

The basic example of intelligence is when a creature registers something in its environment as something, e.g., as a certain color or shape, or as an object with a certain color and shape. Registering something in this way implies that the registered thing belongs to an external world. That is a world independent of the creature registering it or, more precisely, of the registration process itself. When the creature registers something in this way, it has understood something. (Which is not the same as having understood it correctly, in the best way, or even at all. It could be an illusion. When I speak of understanding here, I am referring to a process of a specific nature, not to its result or the qualities of that result.) To register a thing in this way is to understand it: to understand, first and foremost, that it is, how it is, and what it is.[3]

The prerequisites for understanding lie ultimately in the qualitative difference between a creature’s perceptual system and the world perceived by that system. A creature’s intelligence consists primarily in its ability to bridge this qualitative difference by producing, within its perceptual system, a representation of (some part of) the external world. In other words, it produces on one qualitative level a representation of something whose original form exists on another. It is the nature of this process that justifies describing its result as an understanding of something. Secondarily, the creature’s intelligence consists in the qualities of this representation, e.g., how quickly it can be produced, how well it corresponds to conditions in the external world, how practically useful it is, how easily it can be communicated…

And what is the point of this work of translation? What function does understanding serve? The evolutionary rationale for understanding is that it is a prerequisite for every more complex life form, i.e., for organisms that form an integrated functional whole of several different interacting systems. To interact with its external world, and thus to be able to exist, a creature of this kind must be able to perceive that world on a level corresponding to its own highest level of complexity. That level always lies more or less above the atomic or molecular level. Natural science considers the atomic or molecular level the building-block level of existence, and sometimes, in its more materialistic moments, claims it is the only truly existing level. This applies at least to all creatures with a central nervous system. Understanding, in some form, is thus an integral part of such a system. Conversely, understanding can be an unnecessary cost, and thus a burden, for creatures lacking a nervous system. In other words, if one wants to construct a genuinely intelligent computer, one must construct a computer with a nervous system. But one must then also be aware that it will be fallible and can make mistakes, because a step between qualitatively different levels can always be taken in more than one way.

Humans and many other creatures possess intelligence, in different forms and degrees, and can understand things. Before the breakthrough of natural science, this was an intuitive conviction based on our experience of ourselves. Today it is also evident from what we know about the physical nature of the world and about how our brain and nervous system are structured and function. This knowledge tells us that nature, including our own physical basis, differs significantly from the way we experience and understand it in conscious form. According to physical theory, the world in itself, or outside the circle of consciousness, consists not of trees, clouds, cats, and similar things. It consists instead of (quality-less) combinations of atoms and molecules and the various energies and forces bound to them. With the help of the electrochemical systems in our brain (our nervous system), we perceive the parts of this world we come into contact with (and are evolutionarily conditioned to regard as relevant). We then transform and reinterpret their physical impact on us into mental states in the form of qualitative properties of various kinds: colors, tastes, and sounds, hard and soft, warm and cold. Although the mental world essentially constitutes an understanding of the physical world, the two worlds thus differ in a rather dramatic way.

It is important to add here that no individual mental world is the only possible interpretation of the physical external world with which the individual interacts. This follows from the qualitative differences between them. A mental world need not even be a particularly good or adequate interpretation of the physical world, as is the case with mentally ill or otherwise intellectually impaired individuals. The single most important thing to know about consciousness, or the psyche, is that it constitutes a constant, ongoing interpretation of something independent of itself. The result of this interpretive process is only one of several possible results. Dogmatism, whether political, religious, or scientific, is one of the most peculiar phenomena of the human intellect, because it denies the fundamentally dynamic nature of that faculty.

What makes humans and similar creatures capable of understanding is that the physical makeup of a human’s perceptual organs does not differ in kind from the world those organs perceive; rather, both exist on the same qualitative level. Thus, it is with the help of a system of the same qualitative kind as the rest of the world (= the body as natural science describes it) that humans are able to produce a qualitatively new level. This is a level of understanding consisting of discrete meaning-bearing parts that interact with one another by virtue of their meaning/content. In our experience, this is exemplified most clearly by our consciousness, and within our consciousness perhaps most clearly by language. Consciousness as such constitutes a form of understanding.[4] However, we must not identify intelligence and understanding with consciousness. Conscious processes make up only a small part of the processes of understanding.

There are “materialists” who want to reduce consciousness and its activities to their physical substrate, i.e., to the electrochemical structures and processes of the brain. Their main reason is (presumably) that they believe the “immaterial” consciousness lacks causal power and therefore cannot influence the body; on this view, consciousness cannot explain anything. Empiricists claim that human knowledge must start from experience and be tested against it. To the extent that these materialists are empiricists, their reasoning is surprising, because all our experiences make up the content of our consciousness and are thus conscious in form. If one wants to build one’s theories on experience, one cannot reduce one’s experiential basis to components of the theories formed on that basis. Such a reduction deprives the theory of its independent empirical foundation and turns it into theoretical speculation or, in other words, into philosophy. Such speculation carries no more weight in itself than any other.

Every organizational level of a functional whole possesses a certain degree of autonomy relative to the levels it builds upon. This applies to social organizations as well as to logical systems and biological beings. The superordinate, or higher, level is of course dependent on the simpler underlying levels and influenced by the activity taking place on them. At the same time, it possesses a capacity for independent activity and self-governance. It owes this capacity to its own organization, which is unique and more general within the whole, and to the functions linked to it. By governing itself, the superordinate level also governs all the subordinate levels. The highest organizational level of a functional whole will, by governing itself, govern the whole of which it constitutes the overarching organizational level. This is what we describe in humans as free will and the capacity to choose.[5]

The relationship between a digital algorithm (a computer program) and the binary signs and physical structures (processor and memory, etc.) it builds upon is an illustrative example of this. The algorithm constitutes the operative level. It determines how the binary signs are to be combined and which operations are to be performed. But the algorithm’s way of functioning is in turn conditioned by the binary language and its possibilities, and its computational capacity is limited by the architecture and capacity of the physical structures.

Another example of the same relationship is human consciousness or, more precisely, human understanding (= conscious + unconscious intelligence activities). Human understanding represents a new organizational level relative to the electrochemical systems in the brain. Understanding and the nervous system are parts of the same functional whole (= the human body), and understanding constitutes the superordinate system. Understanding can therefore, by virtue of its operative capacity, govern the nervous system and the rest of the body. Since understanding and the other systems form an integrated whole, this operative capacity is neither unconditional nor unlimited. On the contrary, our experience shows us that it is subject to many conditions and limitations. (Otherwise, we would not be dealing with an integrated functional whole.) Nevertheless, understanding constitutes an operative level with autonomous capacity. If this were not the case, we would be faced with a surprising fact: a biological organism, in a protracted and costly evolutionary process, would have developed an advanced, energy-demanding organ that serves no practical function! In any case, the operative capacity of understanding, as well as its many different functions, is already evident from consciousness itself. We experience ourselves governing ourselves with the help of our understanding. This experience of conscious governance has certainly been questioned, partly on the basis of the aforementioned limitations to which it is subject. Yet our daily experience of the operative capacity of understanding is as strong an argument as our experience-based conviction that the external world and other people exist.[6] These two convictions lie on the same logical level. Those who hold the opposing view must consequently produce extremely strong counterarguments. The presumption is on our side. But we are not dogmatic! Experience has taught us not to be. If our opponents can show that we live in an illusion, without thereby also showing that they themselves do, we are prepared to reconsider our view.

With our understanding and our consciousness, we can govern both (many of) our thoughts and (many of) the actions and movements we perform with our body. We can therefore also govern the subordinate operative levels on which the superordinate operative system of understanding builds and depends for its components and structure. That is, we can govern the electrochemical structures and processes of the nervous system. As far as I can see, this conclusion does not quite align with the views and theories that dominate science and philosophy. Yet that understanding can govern the nervous system, and in many respects does, is actually as self-evident as that algorithms can govern the operations in a computer. Because its meaning-bearing units and their relations have operative capacity, understanding also has the capacity to govern its non-meaning-bearing physical substrate. And just as algorithms do not govern everything that happens in a computer, understanding does not govern everything that happens in our body or even in our brain. The basic prerequisite for the governing capacity of understanding lies, just as for algorithms, in its being limited in scope and concerned with specific tasks.[7] This understanding of the causal capacity of understanding and consciousness may contradict the view of causality held in science and philosophy. If so, it is the latter that is too narrow or incorrect.[8]

A parenthetical remark: The discovery that conscious activity seems to be preceded by neural activity, i.e., that the brain appears to think before we become conscious of our thoughts, can be used as an argument against the idea that consciousness has operative capacity. First, this discovery is not an argument against the operative capacity of comprehension-driven thinking, since far from all comprehension-driven thinking is conscious. Second, as Pascal already pointed out, all intellectual operations, conscious as well as unconscious, are based on memory. We must constantly remember things, many things, in order to be conscious. But we cannot be conscious of all these memories simultaneously, for then we could not think and reason consciously. Beneath every conscious thought, therefore, there are many unconscious ones. We quite often have a feeling that we know what we are going to say, or what the next step in an argument is, just before we say it. If our chains of thought encompassed only what we were conscious of, they simply could not hold together or perform their tasks. This observation also helps us realize something about what we are not conscious of at time T1 but will become conscious of at T2. It must be of the same quality as what we are conscious of at T1. Only then can we become conscious of it at T2, and only then can it interact with the conscious state at T1 and form a chain of thought coherent with T1. The prerequisite for consciously remembering one thing is that we are, both beforehand and at the same time, unconsciously remembering many other things. It is entirely obvious that neither reasoning nor memory could function if the processes of comprehension were limited to what is currently present in consciousness.

It is entirely possible that our conscious thinking at any given moment constitutes only a small part (the proverbial tip of the iceberg) of comprehension-driven thought processes, i.e., that the greater part of our representation-bound and representation-governed thinking is always unconscious. The discovery of structural correspondences between preconscious brain activity and subsequent conscious activity actually helps explain how we can work consciously, and thus how consciousness can have operative capacity.

Jonas Åberg is a trained librarian and a PhD candidate in philosophy. He has over a decade of experience working in libraries and writes from a deeply rooted interest in philosophy and politics, approaching the former with passion and the latter with critical distance.

[1] See Situational Awareness: The Decade Ahead by Leopold Aschenbrenner.

[2] Peter Wohlleben, The Hidden Life of Trees: What They Feel, How They Communicate – Discoveries from a Secret World (The Mysteries of Nature 1)

[3] To understand is not to reproduce or copy something. Whatever intelligence produces, whether true or false, useful or useless, always represents something qualitatively new or different in relation to its physical basis.

[4] The two qualitative levels, and the interaction between them, are also the key to consciousness and to an understanding of what it is. Consciousness itself represents understanding; in every respect, it constitutes a level of understanding. Every clear and distinct content of consciousness represents an understanding of something. Even dreams? Absolutely: the concepts of dreams are no different from those of waking life. How else could we remember our dreams and describe what we experienced in them?

[5] The functional wholes we call living organisms consist of a hierarchy of organizational levels, where the more complex and general levels build upon the simpler and more localized ones. These organizational levels interact with one another within the framework of the functional whole they collectively form and constitute the internal preconditions for. The nervous system is an example of a system at a high organizational level. In many creatures, it likely represents the highest organizational level.

In some beings, such as humans, the nervous system has developed the ability to register the external world in the form of representations. These representations can interact with one another by virtue of the fact that they (1) persist over time, (2) constitute discrete units, and (3) possess meaning or content — that is, they serve as signs for something. Through the interaction of these elements, a new kind of operative level becomes possible. For simplicity, we may call it consciousness.

It is in these meaning-bearing representations and their content-driven interactions that the qualitative difference lies between a conscious being’s perceptual system and that which is perceived (the world). Intelligence, or understanding, is thus representational in nature, and the purpose of its activity is to generate a meaningful and meaning-governed world upon a physical base that lacks these qualities. Yet this veil of meaning is no less true and real than the unveiled reality concealed beneath it. In fact, concepts such as truth and reality only find application at the level of consciousness.

Consciousness, therefore, constitutes not only the highest organizational level but also the highest level of reality. From this perspective, the existence of a divine intelligence and a divine creator does not appear absurd; it is a logical possibility. On logical grounds, the world of a divine intelligence could represent a reality at an even higher level than human consciousness. We would hardly regard an intelligence as divine if it did not represent a reality superior to our own.

[6] The experiences we have of failing to govern ourselves are in fact further evidence that consciousness constitutes an operative level. These include being unable to control our emotions, not understanding why we act as we do, and failing to fulfill our intentions. It is through these difficulties and failures, just as much as through our successes, that we become aware of our intellect’s operative capacity and the possibilities it encompasses.

[7] The capacity of understanding to govern our emotions is severely limited and indirect. Nor can it determine the nature of our sensory impressions, though it has various means of controlling what we experience (such as averting our gaze or closing our eyes) and can, through concentration, make our experiences clearer and more detailed. It exerts significant, though far from complete, influence over our thinking and reasoning. It can (if willing and making the effort) determine almost completely what we say, and to an even greater degree what we write.

[8] The difficulty in accepting the causal capacity of consciousness likely stems from the way the qualitative differences between the body (the nervous system) and consciousness have come to be defined in terms of the material and the immaterial. These two concepts, and the categorical distinction they create, obstruct many insights. How “material” is organic life, really? Isn’t there a significant difference between a single-celled organism and a molecule?

The fact that we can explain the processes of organic life without invoking any Bergsonian “vital force” does not mean that our theories aren’t, in essence, explaining this very life force. Even the “materiality” of the smallest known building blocks can be called into question. How material are electrons and the binding forces within atoms? The forces operating at the macro-level of physics raise the same questions.

It would be better — and more instructive — if we could approach the phenomena of the world in a more presuppositionless way, that is, in a more philosophical and less dogmatic manner, striving as far as possible to describe each thing as it actually is, without relying on superficial and generalized concepts like “material” and “immaterial.” These concepts do little to deepen our understanding. Their appeal lies in their ability to give us the illusion of comprehension. Once we characterize something using these terms, we feel we have thereby understood it. When we assign a name to something, it appears less foreign to us.

https://www.arktosjournal.com/p/some-reflections-on-ai-part-1